What Is llms.txt?

A plain-language guide to the new "map" for large-language models

TL;DR

llms.txt is a simple Markdown file you place at your site's root (/llms.txt). Inside, you list the pages, docs and policies an LLM should read first—plus clean links to Markdown versions of those pages. Think of it as robots.txt for AI context rather than crawl permission. The idea was proposed in September 2024 by AI researcher Jeremy Howard and is already being adopted by dev-tool and SaaS sites.

Why Was llms.txt Invented?

1. HTML is noisy

When an LLM fetches a page it also gets nav bars, ads, cookie banners and scripts—wasting the model's context window. llms.txt points straight to the content you want quoted.

2. Docs are scattered

APIs, policies and FAQs often sit in different sub-domains. A single index file lets an AI assistant stitch them together quickly.

3. AI answers are becoming the front door

Search engines and chatbots increasingly surface direct answers. If the bot can't find your canonical info, it guesses—or quotes a third-party blog. llms.txt reduces that risk.

How Does It Work?

    /llms.txt  (Markdown)
    │
    ├─ # MyProject              ← one H1 title
    │    > Short one-line blurb
    │
    ├─ ## Documentation         ← H2 sections
    │    - /docs/start.md : Quick start
    │    - /docs/api.md   : API reference
    │
    ├─ ## Policies
    │    - /terms.md : Terms of Service
    │
    └─ ## Optional
         - /roadmap.md : Future plans
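As a rough illustration of that outline, here is a minimal Python sketch that renders the same structure from plain data. The function name build_llms_txt and the sample data are hypothetical. Entries are written as Markdown links, which is how the published proposal formats them as far as we know; the "path : description" pairs in the diagram map to the link URL and link text.

```python
from typing import Dict, List, Tuple

# Hypothetical shape: section name -> list of (link text, URL).
Links = List[Tuple[str, str]]


def build_llms_txt(title: str, blurb: str, sections: Dict[str, Links]) -> str:
    """Render one H1 title, a blockquote blurb, then one H2 per section."""
    lines = [f"# {title}", "", f"> {blurb}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for text, url in links:
            lines.append(f"- [{text}]({url})")
        lines.append("")
    # Collapse trailing blank lines into a single final newline.
    return "\n".join(lines).rstrip() + "\n"


example = build_llms_txt(
    "MyProject",
    "Short one-line blurb",
    {
        "Documentation": [
            ("Quick start", "/docs/start.md"),
            ("API reference", "/docs/api.md"),
        ],
        "Policies": [("Terms of Service", "/terms.md")],
        "Optional": [("Future plans", "/roadmap.md")],
    },
)
print(example)
```

Generating the file from data like this keeps it in sync with your docs; writing it by hand works just as well for a small site.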

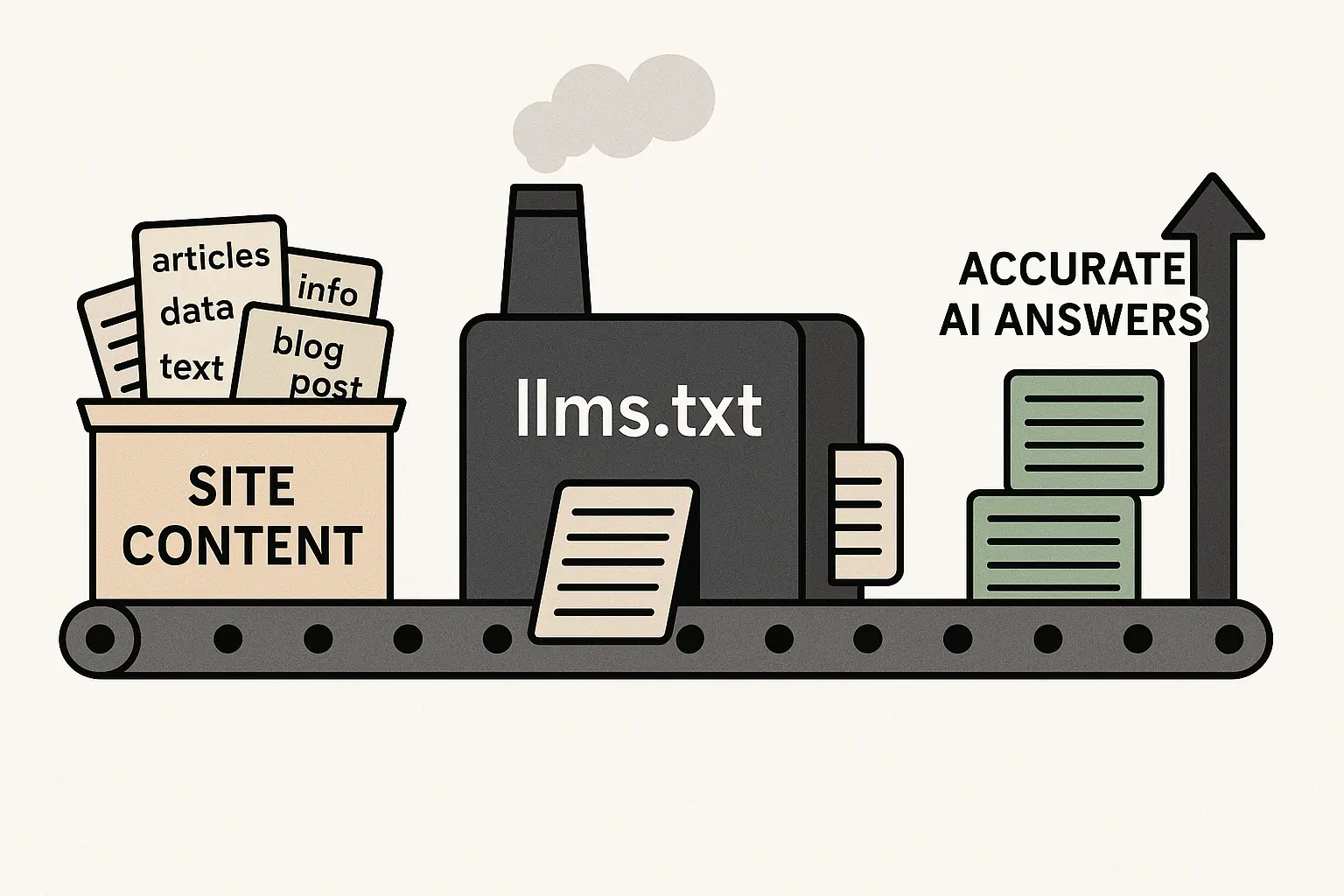

The llms.txt Process

How ContextKit transforms your website content into AI-readable format

Rules of thumb

- Only H1 for the title and H2 for sections—no deeper nesting.

- Keep it under ~8k tokens per language so the whole file fits in an LLM prompt (this ceiling will rise as model context windows grow).

- Link to Markdown or plain-text versions of pages whenever possible (source: WordLift).
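These rules of thumb are easy to check automatically. The sketch below is one possible linter, not an official validator: check_llms_txt is a hypothetical name, and the four-characters-per-token ratio is only a rough assumption for English text, so treat the token figure as an estimate.

```python
import re
from typing import List

TOKEN_BUDGET = 8_000   # the ~8k figure from the rules above
CHARS_PER_TOKEN = 4    # assumption: crude average for English text


def check_llms_txt(text: str) -> List[str]:
    """Return rule-of-thumb violations; an empty list means nothing was flagged."""
    problems = []
    h1_count = 0
    for number, line in enumerate(text.splitlines(), start=1):
        match = re.match(r"^(#{1,6})\s", line)
        if not match:
            continue
        depth = len(match.group(1))
        if depth == 1:
            h1_count += 1
        elif depth > 2:
            problems.append(f"line {number}: heading deeper than H2")
    if h1_count != 1:
        problems.append(f"expected exactly one H1 title, found {h1_count}")
    estimated_tokens = len(text) // CHARS_PER_TOKEN
    if estimated_tokens > TOKEN_BUDGET:
        problems.append(f"about {estimated_tokens} tokens, over the {TOKEN_BUDGET} budget")
    # Flag Markdown links that do not point at .md or .txt files.
    for url in re.findall(r"\]\(([^)\s]+)\)", text):
        if not url.lower().endswith((".md", ".txt")):
            problems.append(f"link is not Markdown or plain text: {url}")
    return problems
```

A link to an HTML page is not an error in itself; the last check only flags it so you can decide whether a cleaner Markdown version exists.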

Many sites also add an /llms-full.txt—a concatenation of all docs for models with giant context windows (e.g. Claude 100k).
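A concatenated file like this can be assembled with a few lines of code. The sketch below assumes the Markdown sources are already loaded into a dictionary keyed by path; build_llms_full and the comment-style separator are illustrative choices, not part of any specification.

```python
from typing import Dict


def build_llms_full(title: str, docs: Dict[str, str]) -> str:
    """Join Markdown documents into one file, labelling each with its source path."""
    parts = [f"# {title}"]
    for path, body in docs.items():
        # Hypothetical separator: an HTML comment naming the source file.
        parts.append(f"<!-- source: {path} -->\n{body.strip()}")
    return "\n\n".join(parts) + "\n"


full = build_llms_full(
    "MyProject",
    {
        "/docs/start.md": "# Quick start\nInstall it.\n",
        "/docs/api.md": "# API reference\nCall it.\n",
    },
)
print(full)
```

Keeping the documents in the same order as the sections of llms.txt makes the two files easier to compare.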

How Is It Different from robots.txt?

| robots.txt | llms.txt |
|---|---|
| Tells crawlers where they may / may not go | Tells LLMs which pages matter most |
| Plain text directive syntax | Markdown outline |
| Affects training & indexing access | Optimises inference-time context |
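To make the right-hand column concrete: a tool that consumes llms.txt only needs a small outline parser, because the file answers "what should I read first?" rather than "may I fetch this?". The sketch below assumes entries are Markdown links under H2 headings; parse_llms_txt and reading_list are hypothetical names, and skipping the Optional section by default is one reasonable reading of that heading, not a requirement.

```python
import re
from typing import Dict, List, Tuple

# Matches list items of the form "- [Title](url)" with optional trailing notes.
LINK = re.compile(r"^-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)]+)\)")


def parse_llms_txt(text: str) -> Dict[str, List[Tuple[str, str]]]:
    """Map each H2 section name to its (title, url) links, in file order."""
    sections: Dict[str, List[Tuple[str, str]]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("## "):
            current = line[3:].strip()
            sections[current] = []
        elif current is not None:
            match = LINK.match(line)
            if match:
                sections[current].append((match["title"], match["url"]))
    return sections


def reading_list(text: str, include_optional: bool = False) -> List[str]:
    """URLs to load first; the Optional section is skipped unless requested."""
    urls: List[str] = []
    for name, links in parse_llms_txt(text).items():
        if name == "Optional" and not include_optional:
            continue
        urls.extend(url for _, url in links)
    return urls
```

An assistant with a small context window could call reading_list(text) and load only the core pages, while one with room to spare could pass include_optional=True.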

Who's Using It Already?

- WordLift (SEO platform) reports better generative-AI snippets after adding llms.txt (source: WordLift).

- Cloudflare, Mintlify, Hugging Face and dozens of dev tools publish llms.txt files on their docs sub-domains (source: Mintlify).

- Trade press like Search Engine Land calls it "a proposed standard for AI crawling."

Should You Add One?

| If… | Then… |
|---|---|
| You have docs, pricing pages or policies people ask ChatGPT about | Yes. Curate those links in llms.txt so the bot is more likely to quote you accurately. |
| Your site is a 5-page brochure | A mini llms.txt takes 10 min and future-proofs you. |
| You want to block AI training | Use robots.txt rules for AI crawler tokens such as GPTBot (OpenAI) and Google-Extended (Google) instead; llms.txt is for guidance, not blocking. |
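For the blocking case in the last row, the rules live in robots.txt, not llms.txt. The sketch below builds an example robots.txt that disallows the GPTBot and Google-Extended tokens and checks it with Python's standard urllib.robotparser. The example.com URL and the allowed helper are placeholders, and whether a given crawler honours these rules is up to its operator.

```python
from urllib.robotparser import RobotFileParser

# Example policy: block two AI-related tokens, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for agent in ("GPTBot", "Google-Extended", "Googlebot"):
    print(agent, allowed(agent, "https://example.com/docs/"))
```

Checking the rules this way before deploying helps catch a typo that would accidentally block ordinary search crawlers.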

Quick Start Checklist

- Create Markdown versions of key pages (or generate them with a tool like Firecrawl's free generator; source: WordLift).

- Draft /llms.txt with the title, a one-line blurb and H2 sections.

- Keep optional deep links under an ## Optional heading.

- Upload llms.txt to your web root. If you don't want it in Google results, serve it with an X-Robots-Tag: noindex HTTP header; robots.txt has no supported noindex directive.

- Test: fetch https://your-site.com/llms.txt in the browser; it should display as readable Markdown text.
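The final test step can also be scripted. The sketch below is a minimal check under a few assumptions: review_response and fetch_and_review are hypothetical helpers, the accepted content types are our own guess at sensible values, and the noindex check looks for an X-Robots-Tag response header because Google no longer honours noindex lines inside robots.txt.

```python
from typing import List, Mapping
from urllib.request import urlopen


def review_response(status: int, headers: Mapping[str, str], body: str) -> List[str]:
    """Return human-readable warnings for a fetched /llms.txt response."""
    lowered = {key.lower(): value for key, value in headers.items()}
    warnings = []
    if status != 200:
        warnings.append(f"unexpected HTTP status {status}")
    content_type = lowered.get("content-type", "")
    if not content_type.startswith(("text/plain", "text/markdown")):
        warnings.append(f"content type is {content_type or 'missing'}, expected text")
    if not body.lstrip().startswith("# "):
        warnings.append("file does not start with an H1 title")
    if "noindex" not in lowered.get("x-robots-tag", "").lower():
        warnings.append("no X-Robots-Tag: noindex header (fine if indexing is OK)")
    return warnings


def fetch_and_review(url: str) -> List[str]:
    """Fetch the live file and review it; pass your own site's URL."""
    with urlopen(url, timeout=10) as response:
        body = response.read().decode("utf-8", errors="replace")
        return review_response(response.status, dict(response.headers), body)
```

Calling fetch_and_review("https://your-site.com/llms.txt") after each deploy gives a quick signal that the file is still reachable and starts with its title.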

The Bottom Line

llms.txt is still young, but momentum is real. It costs almost nothing to adopt and may decide whether an AI assistant cites your authoritative answer or an outdated forum post. Adding one now is the simplest step you can take to make your site AI-ready.


Feel free to copy this post, tweak it, and publish your own llms.txt today.
